How we built notification rate limiter for eight billion notifications per day for 400 Million Monthly Active Users

Written By - Akshit Verma and Ayush Gupta

Additional Credits

Product: Nishant Tyagi, Shubham Khandelwal, Maanav Doshi, Priyanka Reddy Daggula.

Engineering: Geetish Nayak, Mohammed Farhan, Paras Vishnoi.

Introduction

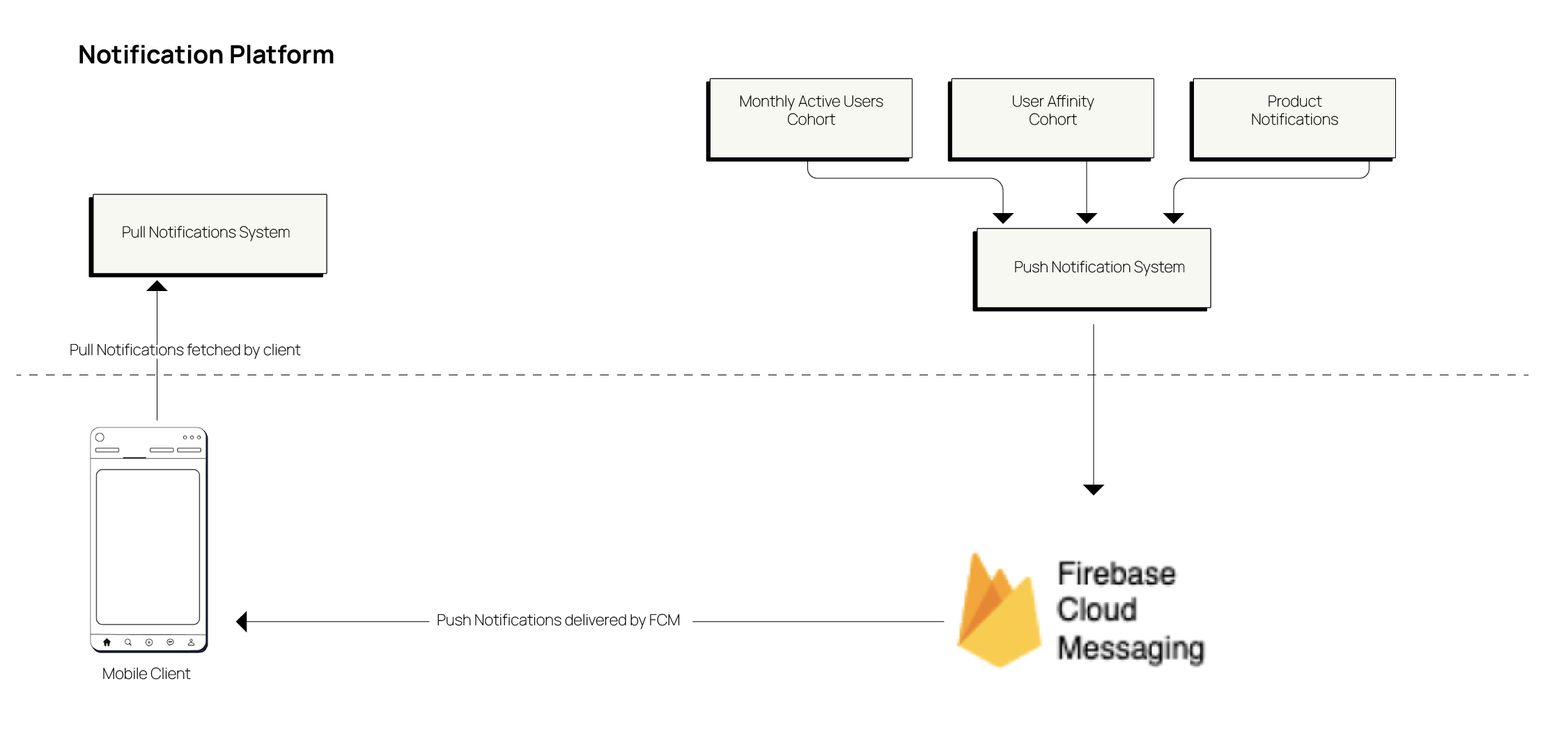

We send around eight billion notifications daily to a user base of 400 Million users combining ShareChat and Moj. This includes pull and push-based notifications, under various notification types [Glossary 1].

Why is it required?

Notifications at ShareChat bring ~18% of DAU. With different teams chasing different OKRs, notifications not only focus on increasing the DAU but are used by each team to achieve their business goals.

Hence, there is an incentive for each team to increase the number of notifications to bring more users to the app. While it might give incremental diminishing returns for the individual teams, the overall increase in notifications disturbs the customer experience and could lead to user annoyance. It eventually leads to users blocking/turning off the notifications.

To tackle this problem, we need to limit the notification per type, per day for each user. Also, the limit per user has to be decided based on user activity or preferences.

Designing Rate Limiter

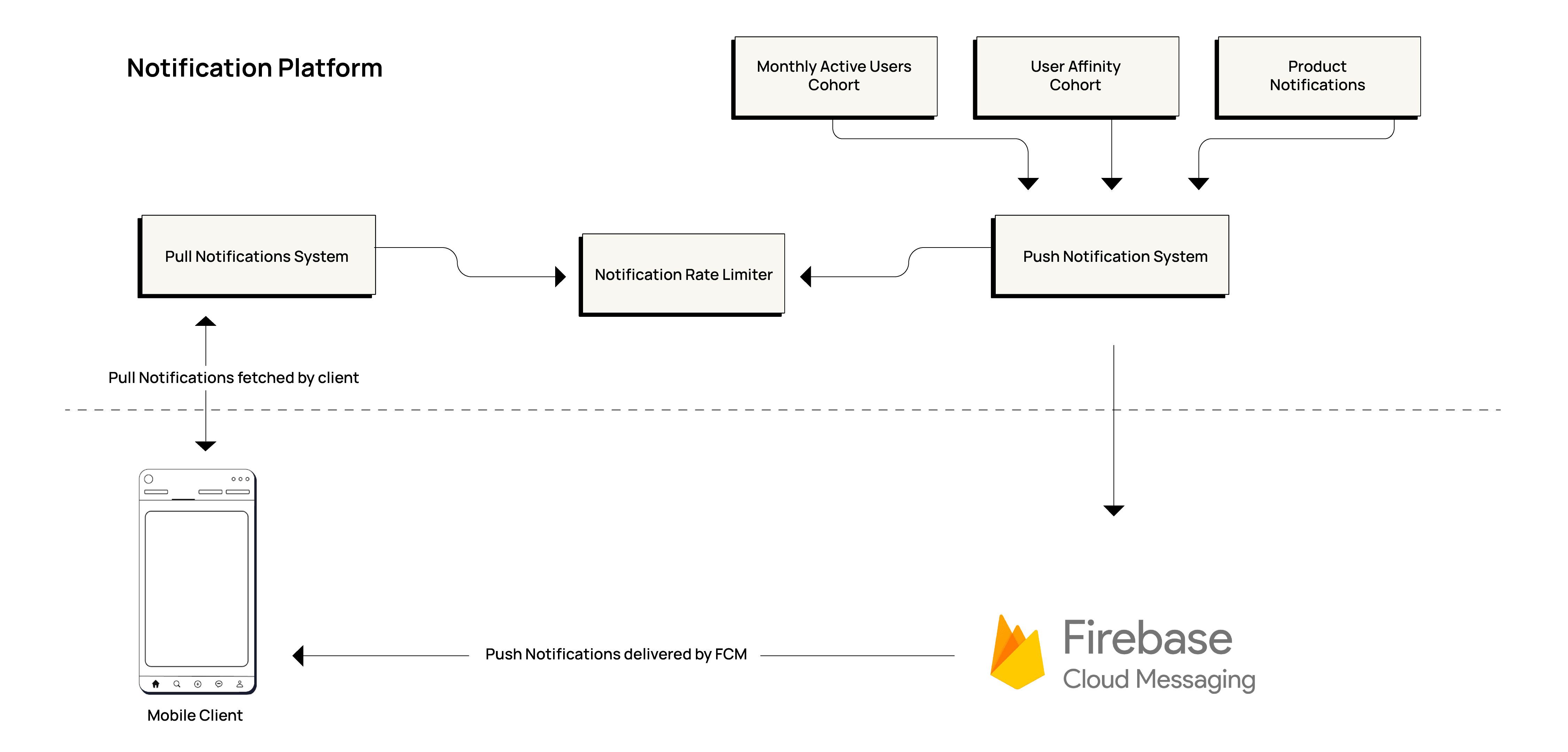

A Notifications volume controlling platform functionality named Notification Rate Limiter was built to solve the below-mentioned problem.

Challenges

- Build notification limit functionality for users per day per notification type [Glossary 1]. The count of users is around 400 million. The notification type is around 15.

- Should support user-personalized notification limits. This personalization is based on user preferences, his last activity, etc

- As users could have multiple devices, notification limits have to be applied on the user device level.

- While sending notifications, the peak requests could reach up to 1 million rps.

- We use pubsub as a messaging queue while sending push-based notifications. Pubsub provides the guarantee of at least one delivery. Due to this, there could be scenarios when the same message gets repeated multiple times. So, rate limiters should handle duplicate notifications for a user's device.

High Level Overview

We enabled notification rate limiting in both push and pull-based notifications.

Choosing rate limiter type

Counter vs Event-Based Rate Limiter

In a counter-based approach, we can maintain a counter for per day, per user Id and deviceId for each notification type. The problem with this approach was the increasing counter is not idempotent, which means if for a notification we increase the counter by one and if the same notification is duplicated then it would increase the counter again.

To resolve this problem, we used an event-based approach. In which we will be storing events corresponding to a notification sent to a user device. In this event, the notification id will serve as a unique identifier(idempotency key) and would prevent duplication as it would be unique for all notification events for a user device.

Also, as needed to apply a limit per day for a user device we decided to use a fixed window rate limiter.

So, we are using a combination of both fixed window rate limiting and event-based paradigms.

Choosing the database

Requirements for the database -

- Writes and reads were going to be very high(400K reads per second + 400K writes per second) total expected RPS is 1 million

- We did not require strong consistency, if data would be eventually consistent within a few milliseconds, then it is fine for our case

- We needed low latency(upto 1-5 ms)

- The database should be able to handle our current scale and should be easily scalable for more load in future

- Should be highly available

- Should be partition-tolerant

These requirements were fulfilled by ScyllaDB which is a NoSQL columnar store.

It provided the following advantages over other solutions.

- ScyllaDB is architected for data-intensive applications that require high scale and low latency

- Is highly available and partition-tolerant in the CAP theorem

- Costs lesser than other cloud-managed solutions available like a cache, or other NoSQL databases. → https://www.scylladb.com/scylla-vs-cassandra/

- Is easily scalable, need to just add more nodes to increase throughput or add more disk storage to increase storage.

- The query pattern by which we need to access data can be easily created in Scylla.

- We did not require any joins

- It provides TTL on rows, this would help in removing older records implicitly.

Design & Implementation

Designing ScyllaDB schema

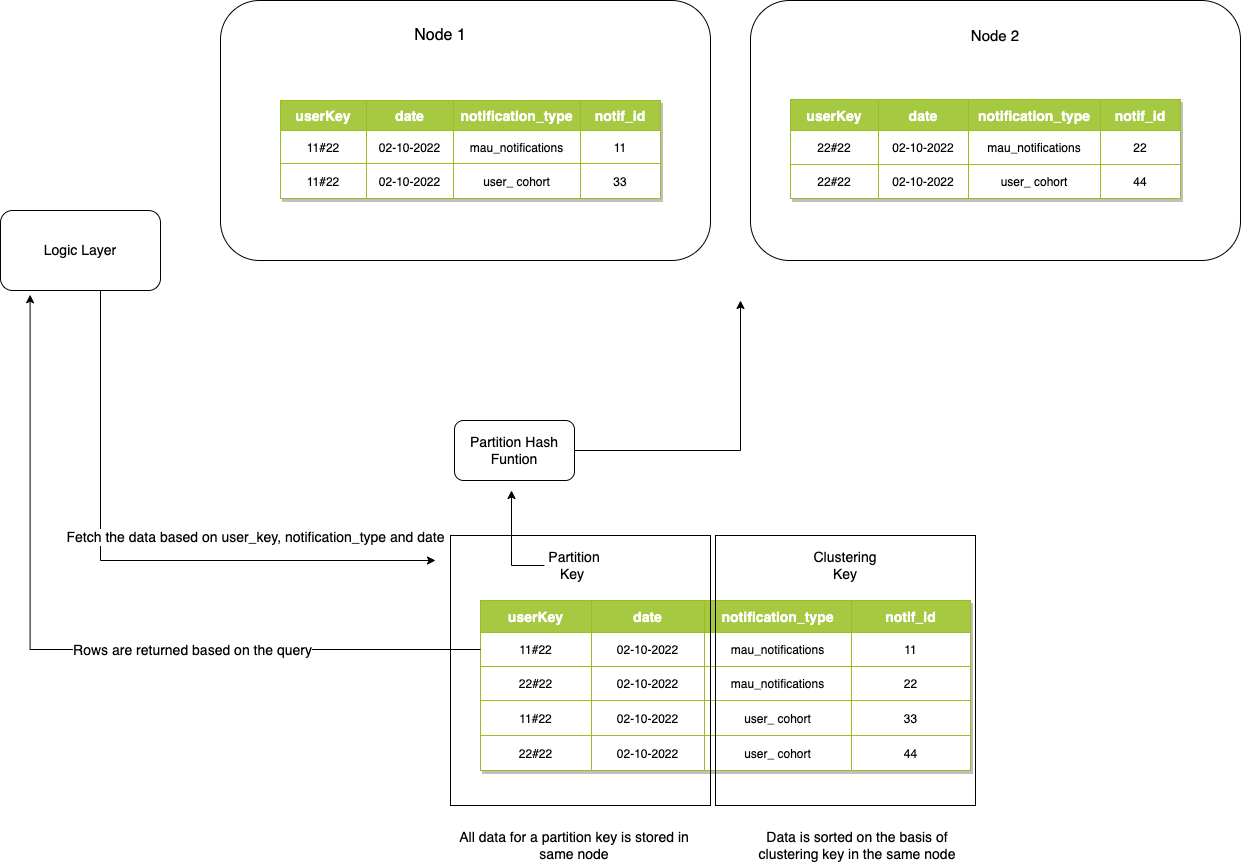

1. Primary key is comprised of the partition key and clustering key

2. All the data for the partition key is stored in the same node.

3. Data is sorted based on the clustering key.

4. We wanted to fetch the counts of notifications sent for the user's device for a particular date. Also, the data needs to be sorted on notification type for the user to efficiently query the data.

5. Need to utilize notification id to uniquely identify the notification send for a notification type to a user device. This is done to prevent duplicate entries of the same notification from falsely impacting the notification send count.

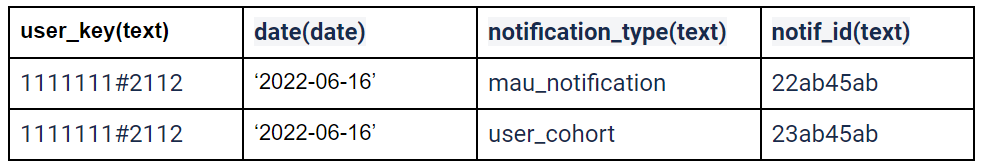

Utilizing these points we designed the ScyllaDB schema as follows.

user_key: combination of user id and device id, as the user can have multiple devices

date: today’s date

notif_id: idempotency key

notification_type: notification type like mau_notification, user_cohort

Partition Key: (user_key, date)

Clustering Key: (notification_type, notif_id)

We have set a TTL of 2 days for a record in order to implicitly delete older records.

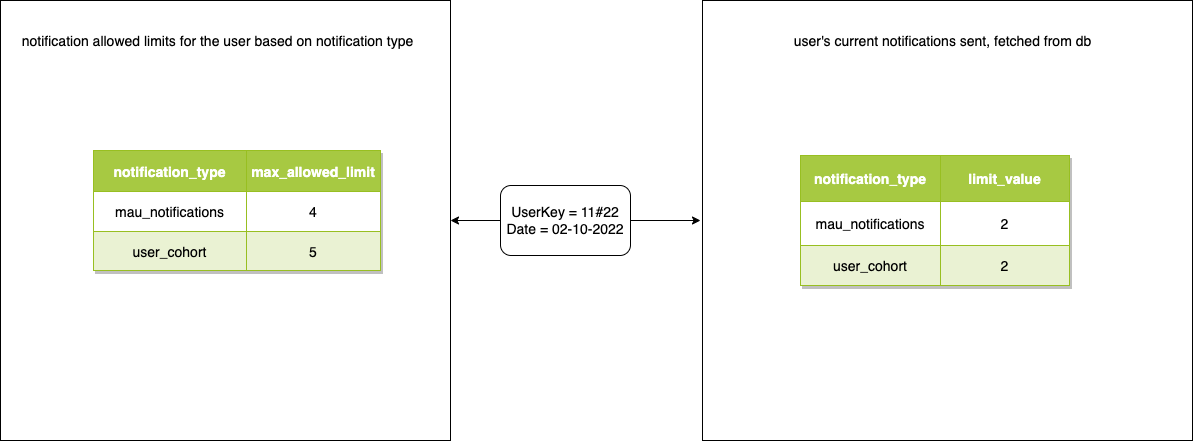

How do we find and update notification limits while sending notifications?

- Fetch Records from DB on the basis of user_key, date(Partition key), and group them on notification_type to get the count for each notification_type.

- Check if the notification limits are exhausted by comparing the count of notification_type (i.e. the total number of notifications sent to the user's device) and the notification limit for the user.

- If the limits are exhausted then the notification is blocked for the user.

- If the limits are not exhausted then insert record and then send the notification to the user.

Max notification types can be up to 15 and we fetch all the notifications present in the DB for a particular userKey.

Why is our solution fast at a huge scale?

- The data for the partition key is stored in the same node. In our design, as the user device is chosen as the partition key all the notification limit data for a user device is stored on the same node. This helps in improving the efficiency of the queries.

- To make requests to DB we leverage goroutines in GO to read and write in the DB, providing high parallelism.

Scope of Modifications

Since our requirement was just to create a rate limiter for a day, we did not store the timestamp in our table, but if a solution is required for minute-level, hour-level rate limiting, then the timestamp could be added in the clustering key and this solution can be converted to a sliding window rate limiter.

Glossary

- Notification Type - Different types of notifications are being sent like MAU notifications, user affinity-based notifications[Glossary 2], product notifications[Glossary 3], etc, total can be up to 15 different types.

- User Affinity-Based Notification - This includes notifications based on user cohort with affinity to a genre like jokes, movies, etc.

- Product Notification - This includes notifications such as like, share, comment, etc notifications.

- Push Notification System - Notifications being sent from the backend to the client via FCM token

- Pull Notification System - Client requesting notifications from the backend via an API

- ScyllaDB Architecture