ShareChat's Livestream: Where Creativity Meets Community

In the bustling digital landscape of social media, ShareChat's Livestream stands out as a powerhouse revolutionizing creator-audience connections. More than just a streaming platform, it's an immersive ecosystem where entertainment meets monetization, and genuine connections flourish.

Creators can host solo sessions, collaborate with fellow artists, and take part in high-energy creator battles that keep audiences hooked. What truly sets ShareChat's Livestream apart is its robust monetization ecosystem: from virtual gifts during livestreams to exclusive one-on-one calls, every interaction creates value. This turns entertainment into a sustainable career path for creators, while giving viewers a direct way to support and connect with their favorite personalities.

Our streaming infrastructure currently runs on two key technologies: WebRTC (Web Real-Time Communication) for high-quality, real-time interactions, and HLS (HTTP Live Streaming) delivered through CDNs for our low-ARPU (average revenue per user) users.

While we have historically relied on external vendors to produce the RTMP stream (which is then converted to HLS), we are now building this capability in-house. This strategic shift offers two significant advantages:

First, it significantly reduces operational expenses by eliminating third-party conversion fees. Second, owning our HLS conversion pipeline gives us direct access to raw video and audio frames from the source, enabling seamless integration with our content monitoring systems. This strengthens our ability to enforce community standards in real time during streams.

Discovering GStreamer: Our Path to Streaming Innovation

In our search for a robust in-house streaming solution, we found GStreamer, an open-source multimedia framework that exceeded our expectations. Its extensive plugin ecosystem and its ability to build custom pipelines in code made it stand out. After thorough proof-of-concept testing, we confirmed that GStreamer could not only handle our RTMP/HLS conversion needs but also integrate seamlessly with our real-time livestream moderation and open up other possibilities, letting us build one unified, efficient pipeline for multiple purposes.

Architecture

We wanted to make sure that the solution was:

vendor-agnostic: ShareChat uses multiple third-party vendors for WebRTC, and we wanted the HLS conversion to work with any of them. Our pipeline should consume only raw video (YUV) and audio (PCM) frames, plus signals (video-on, video-off, creator-joined, etc.).

extensible: We wanted to use this solution not only for the HLS pipeline but also to extend it easily to moderation, recording and other areas.
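To make the vendor-agnostic requirement concrete, here is a minimal sketch of the kind of boundary it implies, assuming a plain C callback table. Every name in it (`creator_signal_t`, `media_sink_callbacks_t`, `adapter_emit_signal`, `track_signal`, `creator_state_t`) is hypothetical and not taken from ShareChat's code. The idea is that each vendor adapter translates its SDK events into these callbacks, so the pipeline only ever sees raw frames and signals.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical signal set mirroring the signals named above. */
typedef enum {
    SIGNAL_CREATOR_JOINED,
    SIGNAL_CREATOR_LEFT,
    SIGNAL_VIDEO_ON,
    SIGNAL_VIDEO_OFF,
    SIGNAL_AUDIO_ON,
    SIGNAL_AUDIO_OFF
} creator_signal_t;

/* One raw video frame (planar YUV, e.g. I420) handed over by a vendor SDK. */
typedef struct {
    const uint8_t *data;
    size_t size;
    int width, height;
    uint64_t pts_ns;            /* presentation time in nanoseconds */
} raw_video_frame_t;

/* One block of interleaved 16-bit PCM audio. */
typedef struct {
    const int16_t *samples;
    size_t frames;              /* samples per channel */
    int channels, rate;
    uint64_t pts_ns;
} raw_audio_frame_t;

/* The only surface the pipeline depends on; vendor adapters call into it. */
typedef struct {
    void (*on_signal)(void *ctx, const char *creator_id, creator_signal_t sig);
    void (*on_video)(void *ctx, const char *creator_id, const raw_video_frame_t *f);
    void (*on_audio)(void *ctx, const char *creator_id, const raw_audio_frame_t *f);
    void *ctx;
} media_sink_callbacks_t;

/* Called by a vendor adapter when its SDK reports a creator event. */
static void adapter_emit_signal(const media_sink_callbacks_t *cb,
                                const char *creator_id, creator_signal_t sig) {
    if (cb != NULL && cb->on_signal != NULL)
        cb->on_signal(cb->ctx, creator_id, sig);
}

/* Example pipeline-side state for a single creator. */
typedef struct {
    int present;
    int video_on;
} creator_state_t;

/* Example handler: tracks presence and video state (ctx is a creator_state_t). */
static void track_signal(void *ctx, const char *creator_id, creator_signal_t sig) {
    creator_state_t *st = ctx;
    (void)creator_id;           /* a real handler would key state by creator */
    switch (sig) {
    case SIGNAL_CREATOR_JOINED: st->present = 1; break;
    case SIGNAL_CREATOR_LEFT:   st->present = 0; st->video_on = 0; break;
    case SIGNAL_VIDEO_ON:       st->video_on = 1; break;
    case SIGNAL_VIDEO_OFF:      st->video_on = 0; break;
    default:                    break;  /* audio signals not tracked here */
    }
}
```

Because the pipeline only depends on `media_sink_callbacks_t`, supporting another WebRTC vendor would mean writing one more adapter that calls these functions, with no change on the GStreamer side.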

Before we go further, let us first cover the basics of GStreamer.

Element: A GStreamer Element is the most fundamental building block in GStreamer; think of it as a single operation in your media pipeline. For example, one element might decode video, another might resize it, and another might encode it. Just like LEGO blocks that connect together to build something bigger, elements can be linked to create a complete media processing chain.

Pad: Pads are the communication ports of a GStreamer Element, the points through which data flows between elements, much like water flows through pipes. Each pad has a specific direction and purpose:

Source Pads (or "Src Pads"):

- These are output ports that push data out of an element

- Think of them as the exit point where processed data leaves

- They face "downstream": data leaving a source pad flows down to the next element

Sink Pads:

- These are input ports that receive data into an element

- Think of them as entry points where data comes in for processing

- They face "upstream": they receive data from the previous element

When an element adds or removes a pad, it emits the "pad-added" or "pad-removed" signal, and you can connect handler functions to these signals. Reacting to them is one of the most important things our pipeline does.

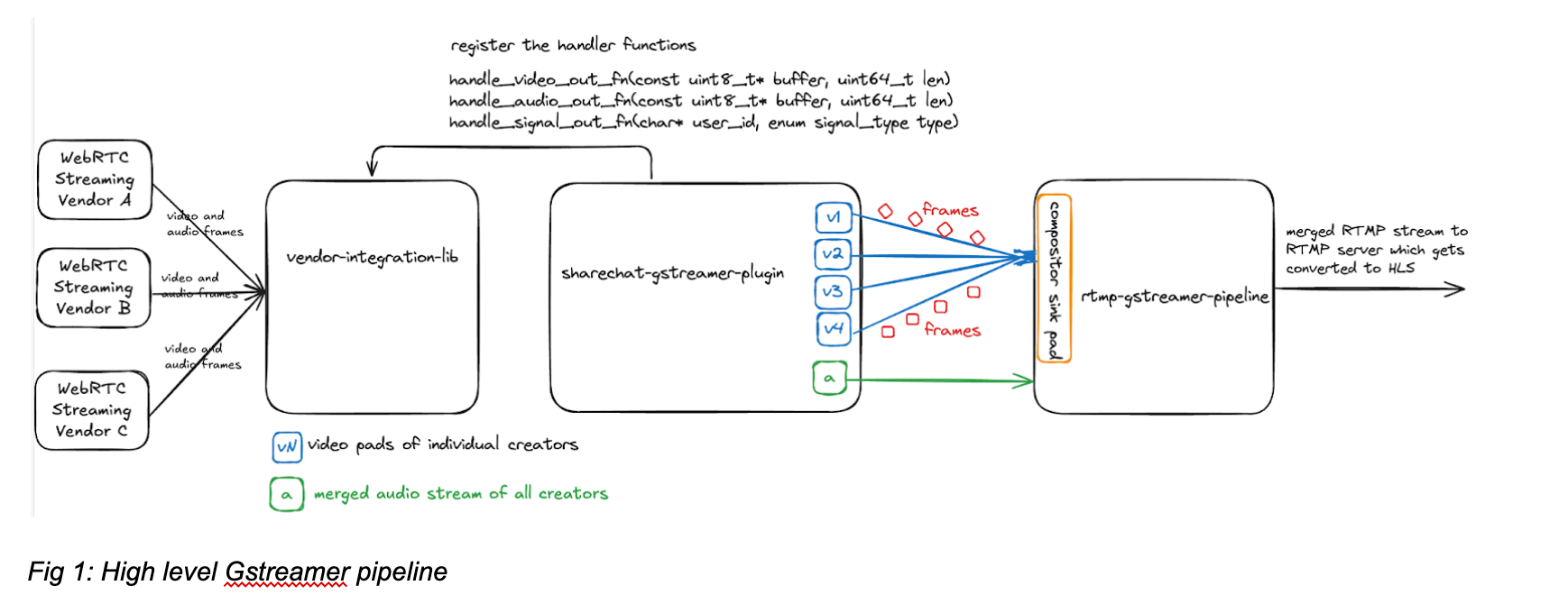

Now that we have covered the basics of GStreamer, let us get into the details. We divided the solution into three libraries written in C/C++.

Let's look at each of them.

1. vendor-integration-library

- integrate with various media-streaming vendors

- get each creator's audio and video frames when the creator goes live

- get signals for a creator such as video-on, video-off, audio-on, creator-left, creator-joined, etc.

2. sharechat-gstreamer-plugin

- handler functions are defined here and then registered with the vendor-integration-library

- new audio and video src pads are created when the handler functions receive the corresponding signals from the vendor-integration-library

- raw audio and video frames received from the vendor-integration-library are converted into GStreamer buffers and pushed out through the new audio and video src pads created above

3. rtmp-gstreamer-pipeline

- merging, synchronization and customization of the audio and video streams coming from sharechat-gstreamer-plugin

- the merged RTMP (Real-Time Messaging Protocol) stream is then sent to an external RTMP server, which converts it to HLS

In this post, we focus on the rtmp-gstreamer-pipeline, as this is where most of the magic happens.

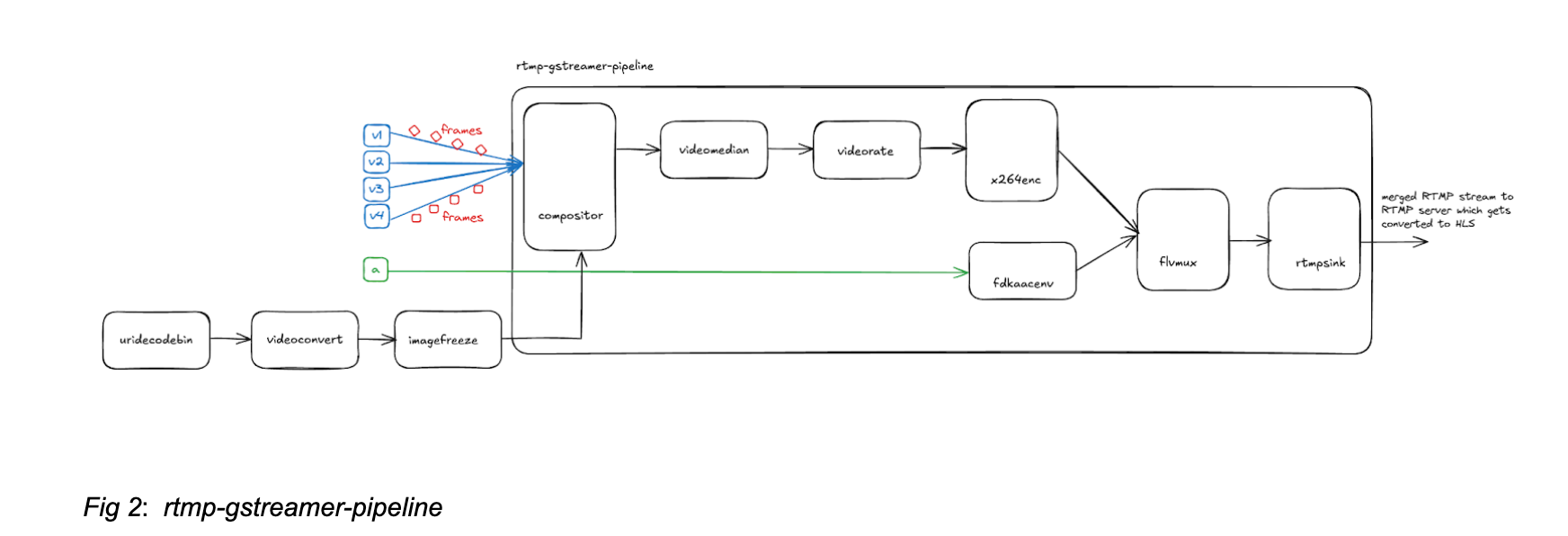

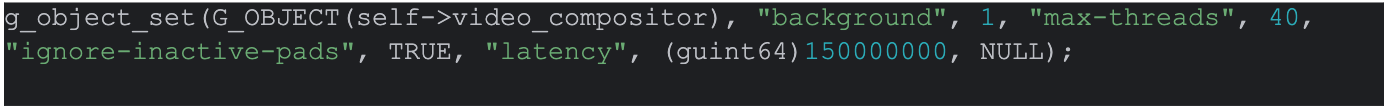

a. compositor

A compositor in GStreamer is an element that combines multiple video streams into a single output stream. In a livestream, several creators' video streams often need to be shown together, and the compositor combines them into one frame.

One crucial job it performs is synchronization: video streams arriving from different sources must be synchronized before they are mixed. The compositor's latency property (inherited from GstAggregator and expressed in nanoseconds) helps achieve this. At its core, latency is like a waiting room for video frames: in a live pipeline, it is the extra time the compositor waits for frames on all of its inputs before mixing them, giving it room to organize and synchronize incoming frames properly. Think of it as giving your video streams a brief moment to "catch their breath" before they perform together.

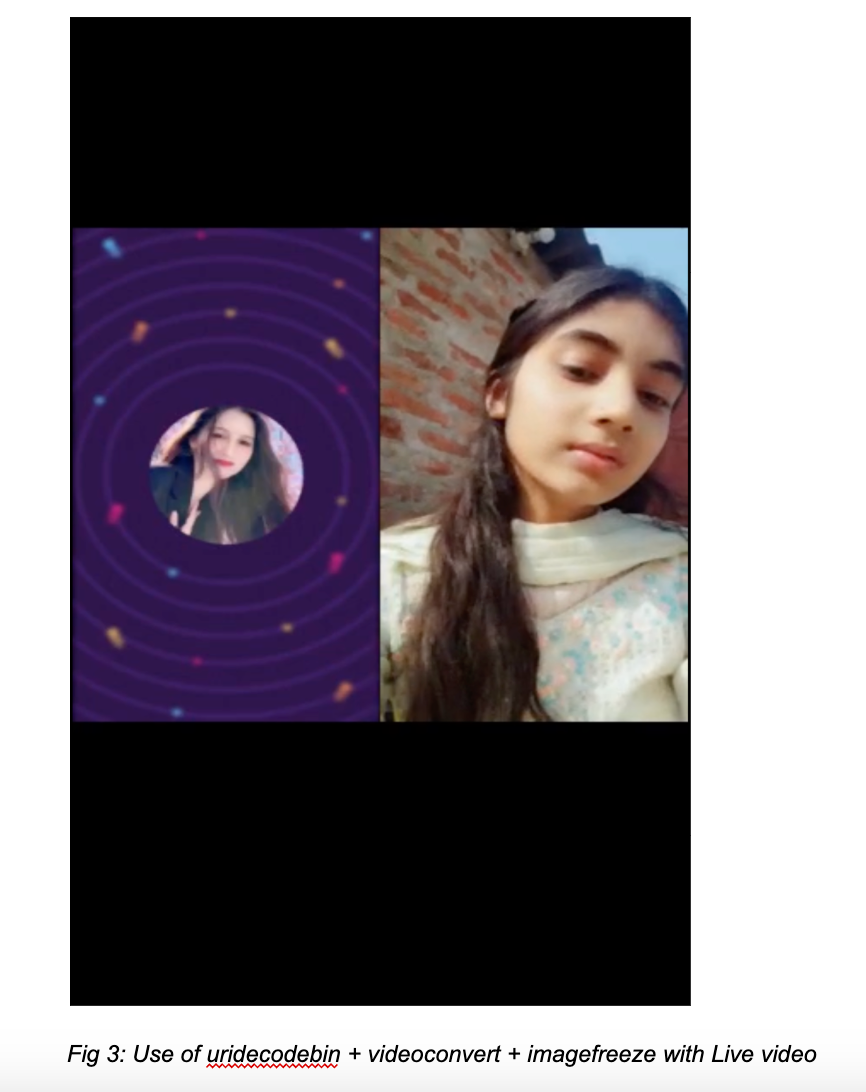

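We also have a custom requirement: if a creator switches off their video, we show their profile picture as a still image instead. This is where GStreamer's uridecodebin, videoconvert and imagefreeze elements come in handy: uridecodebin decodes the image, videoconvert converts it to a raw format the compositor accepts, and imagefreeze repeats the single decoded frame as a continuous video stream.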

b. x264enc

x264enc wraps x264, a video encoder library that compresses raw video into H.264/AVC streams. H.264 remains one of the most widely supported codecs for streaming, and x264 exposes many settings for balancing visual quality against bitrate.

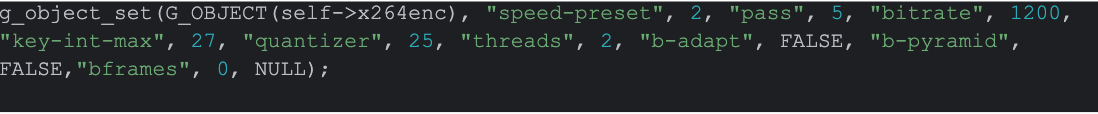

We tune several important properties. Let's look at each of them.

speed-preset

The speed-preset property determines the tradeoff between encoding speed (CPU usage) and compression efficiency. Faster presets (lower values) use less CPU per frame but produce a higher bitrate for the same visual quality, while slower presets compress better at a higher CPU cost. Through testing, we found that speed-preset = 2 (superfast) strikes the best balance between CPU usage and video bitrate for our use case.

pass and quantizer

The pass property selects x264's rate-control mode. We use Variable Bitrate (VBR) encoding, which adjusts the output bitrate based on scene complexity:

- High Complexity Scenes: Higher bitrate for better quality.

- Low Complexity Scenes: Lower bitrate to save bandwidth.

The quantizer property is also tuned to control bitrate. A higher quantizer value reduces both bitrate and quality; we found that quantizer = 25 delivers sufficient quality with imperceptible detail loss, especially for mobile viewers.

key-int-max

Keyframes (I-frames) are crucial for decoding, as they contain the full frame data. The key-int-max value determines how often these frames are generated:

- At 15 FPS, key-int-max = 30 results in an I-frame every 2 seconds.

- This configuration keeps the initial playback delay low for HLS players, since a player can only start decoding at an I-frame, while maintaining a reasonable balance between bitrate and bandwidth consumption.

- Reducing key-int-max further would increase the bitrate (due to more frequent I-frames), which is undesirable for bandwidth-constrained users.
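The arithmetic behind the first bullet is that key-int-max counts frames, so the keyframe interval in seconds is key-int-max / fps. A tiny sketch with hypothetical helper names:

```c
/* key-int-max counts frames between keyframes, so the interval in seconds
 * is key_int_max / fps. */
static double keyframe_interval_seconds(unsigned key_int_max, double fps) {
    return key_int_max / fps;
}

/* Inverse: the key-int-max that gives a target keyframe interval,
 * rounded to the nearest whole frame. */
static unsigned key_int_max_for(double target_seconds, double fps) {
    return (unsigned)(target_seconds * fps + 0.5);
}
```

For example, `keyframe_interval_seconds(30, 15.0)` is 2.0, and keeping the same 2-second interval at 30 FPS would need `key_int_max_for(2.0, 30.0)`, which is 60.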

bframes

B-frames (bidirectionally predicted frames) are compressed frames that reference both previous and future frames, achieving higher compression ratios by storing only the differences between frames.

By setting bframes = 0, we eliminate B-frames. Without them the encoder no longer has to reorder frames, which simplifies encoding, and decoding on user devices becomes less demanding, at the cost of some compression efficiency.

c. flvmux

In our pipeline, audio streams are encoded to AAC using the fdkaacenc element, and the encoded audio is then multiplexed with the H.264 video using the flvmux element. FLV is the container format that RTMP carries.

Learnings

GStreamer is an extremely powerful and battle-tested framework, but building dynamic pipelines is challenging and requires deep expertise:

- GStreamer has a steep learning curve, especially when it comes to creating your own custom elements

- The documentation is good, but you will often need to read the source code alongside it to gain additional insight or to understand undocumented properties of an element (especially properties inherited from a parent class)

- Finding the root cause of an issue can take many iterations, because some edge cases are not easily reproducible

- You have to experiment with an element's properties, because understanding and tweaking them is how you optimize CPU and memory utilization

- Debugging synchronization issues can be tedious and complex, as different elements in the pipeline (such as compositor and flvmux) handle synchronization in different ways

Role of the Workflow Engine

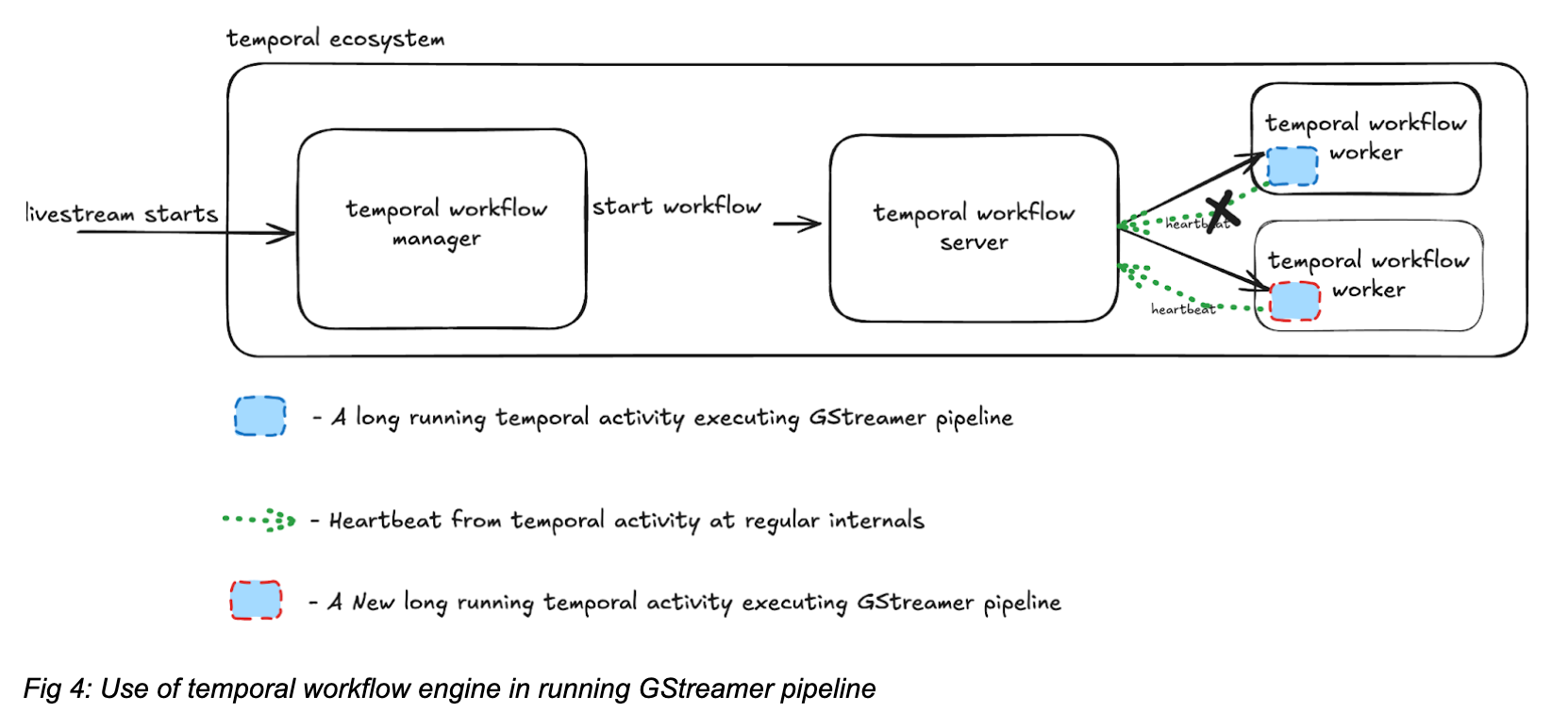

Now that we have discussed the GStreamer pipeline, let's look at availability. The pipeline must always be highly available, because the HLS stream has to be served without interruption. For this, we use the Temporal workflow engine.

When a livestream starts, we create a workflow, which is then scheduled on a worker node. The workflow consists of a long-running activity that executes the GStreamer pipeline and sends heartbeats to the Temporal server at regular intervals. If the heartbeats stop arriving for any reason, the Temporal server reschedules the long-running activity onto a different node, so that the stream is interrupted as little as possible.

What's next?

We are now planning to merge our real-time livestream moderation pipeline into the same pipeline, which we will cover in Part 2 of this blog.